|

I am currently an Applied Scientist at AWS AI Lab, focusing on Multi-turn RL for LLM Agents, and Coding Agents (e.g., Kiro, Amazon Q). I obtained my Ph.D. at Columbia University advised by Prof. Xuan Di and Prof. Elias Bareinboim. Before joining Columbia, I obtained my Master’s degree from Carnegie Mellon University (CMU) in 2020. I am generally interested in LLMs training, LLM agent, RLHF/RLEF/RLAIF, Code Generation, Causality and so on. Representative papers are highlighted. Email / CV (last updated: July 2023) / LinkedIn / Google Scholar / |

|

News

| 2024/12 - Our paper on "Causal Imitation for Markov Decision Processes: A Partial Identification Approach" got published to NeurIPS 2024. |

| 2024/08 - Our paper on LLMs and Mobility Travel Modes got published to ITSC 2024. |

| 2023/12 - Our paper (done at Amazon) on Multimodal Entity Resolution and LLMs got published to ICASSP 2024. |

| 2023/05 - Joined Amazon Artificial General Intelligence (AGI) team as an Applied Scientist summer intern. |

| 2023/01 - Our paper on "Causal Imitation Learning via Inverse Reinforcement Learning" got published to ICLR 2023 |

| 2022/08 - Our paper on decentralized traffic signal control got published to Transportation Research Part C |

| 2022/06 - Our paper on integrated safety-enhanced reinforcement learning (RL) and model predictive control (MPC) got published to Transportation Research Part C |

| 2022/05 - Our paper on "Learning Human Driving Behaviors with Sequential Causal Imitation Learning" got published to AAAI 2022 |

Selected Publications

|

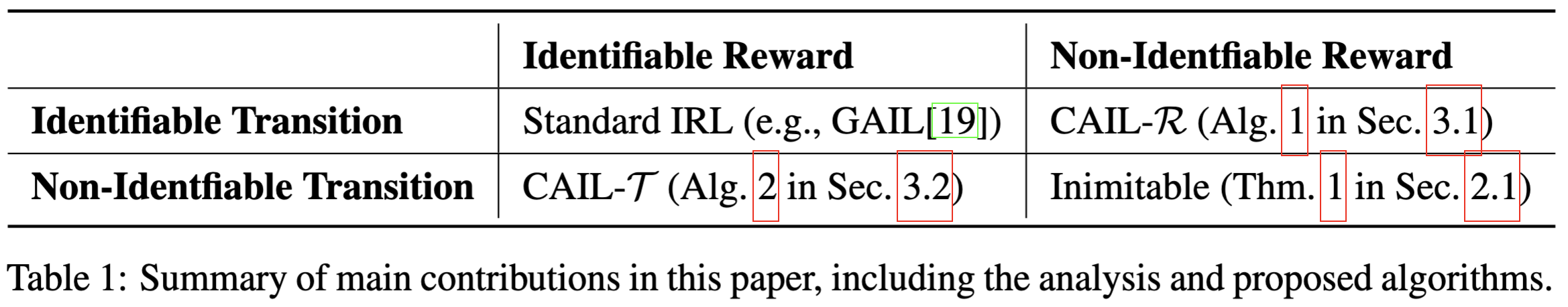

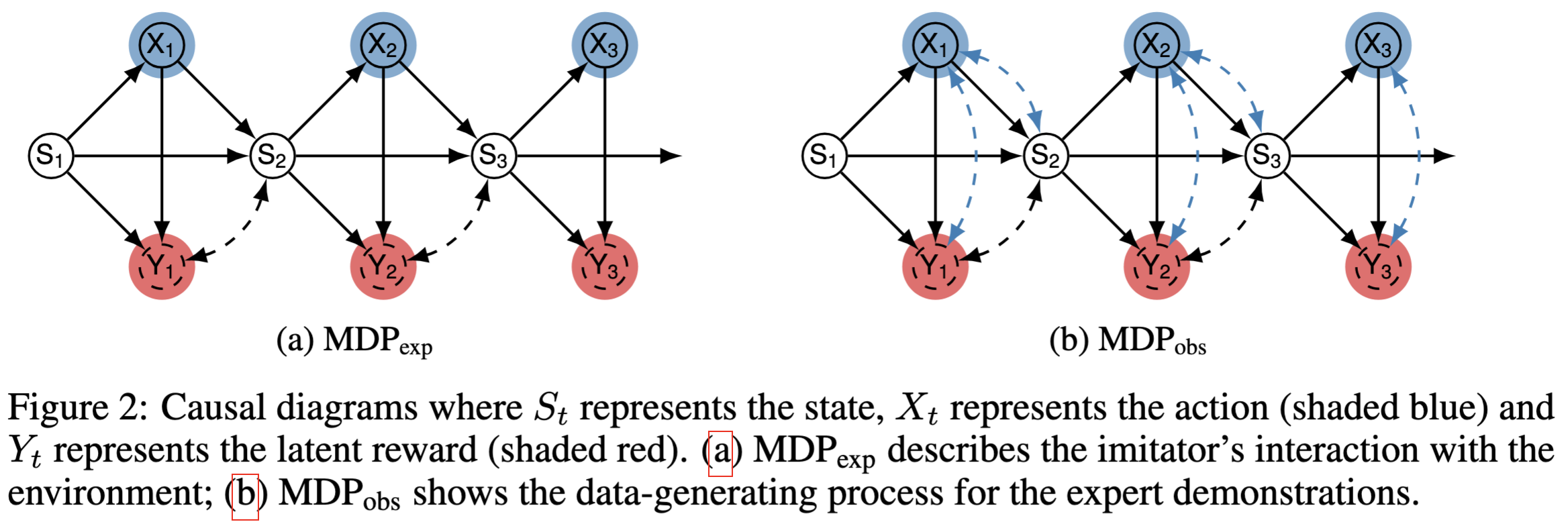

NeurIPS 2024 [paper] In this paper, we investigate robust imitation learning within the framework of canonical Markov Decision Processes (MDPs) using partial identification, allowing the agent to achieve expert performance even when the system dynamics are not uniquely determined from the confounded expert demonstrations. Specifically, we first theoretically demonstrate that when unobserved confounders (UCs) exist in an MDP, the learner is generally unable to imitate expert performance. |

|

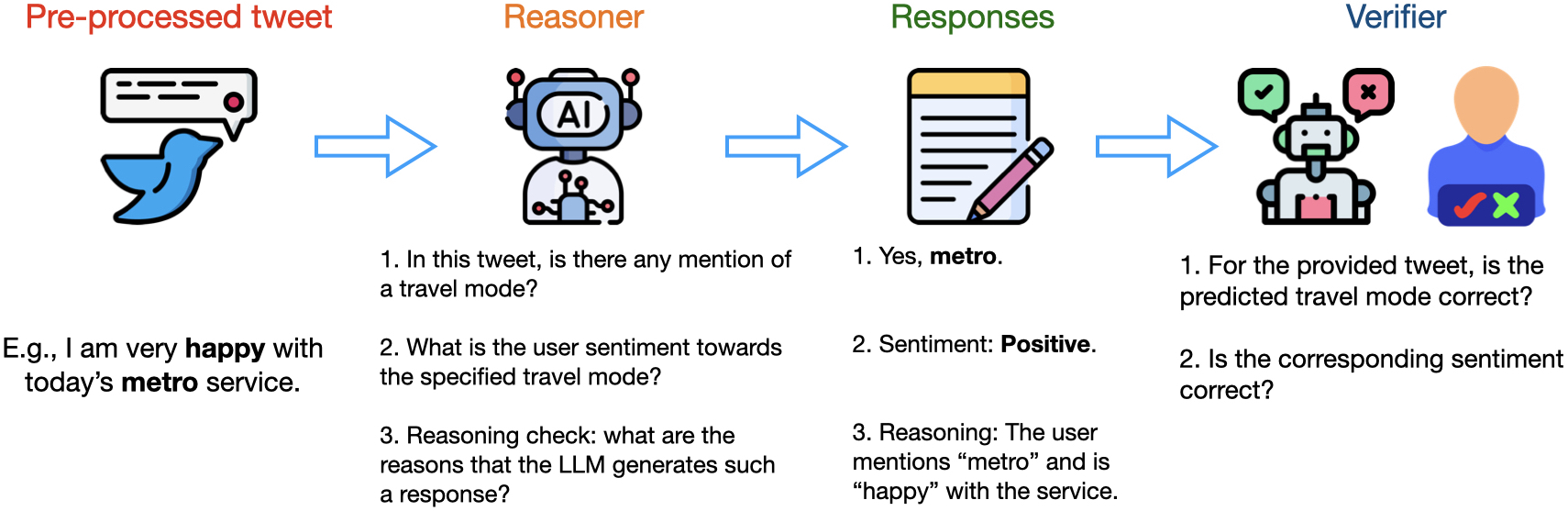

IEEE ITSC 2024 [paper] In this study, we introduce a novel methodological framework utilizing Large Language Models (LLMs) to infer the mentioned travel modes from social media posts, and reason people's attitudes toward the associated travel mode, without the need for manual annotation. We compare different LLMs along with various prompting engineering methods in light of human assessment and LLM verification. |

|

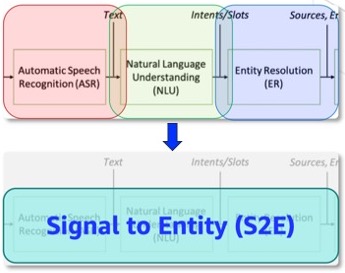

2024 IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2024 [paper] Traditional cascading Entity Resolution (ER) pipeline suffers from propagated errors from upstream tasks. We address this issue by formulating a new end-to-end (E2E) ER problem, Signal-to-Entity (S2E), resolving query entity mentions to actionable entities in textual catalogs directly from audio queries instead of audio transcriptions in raw or parsed format. |

|

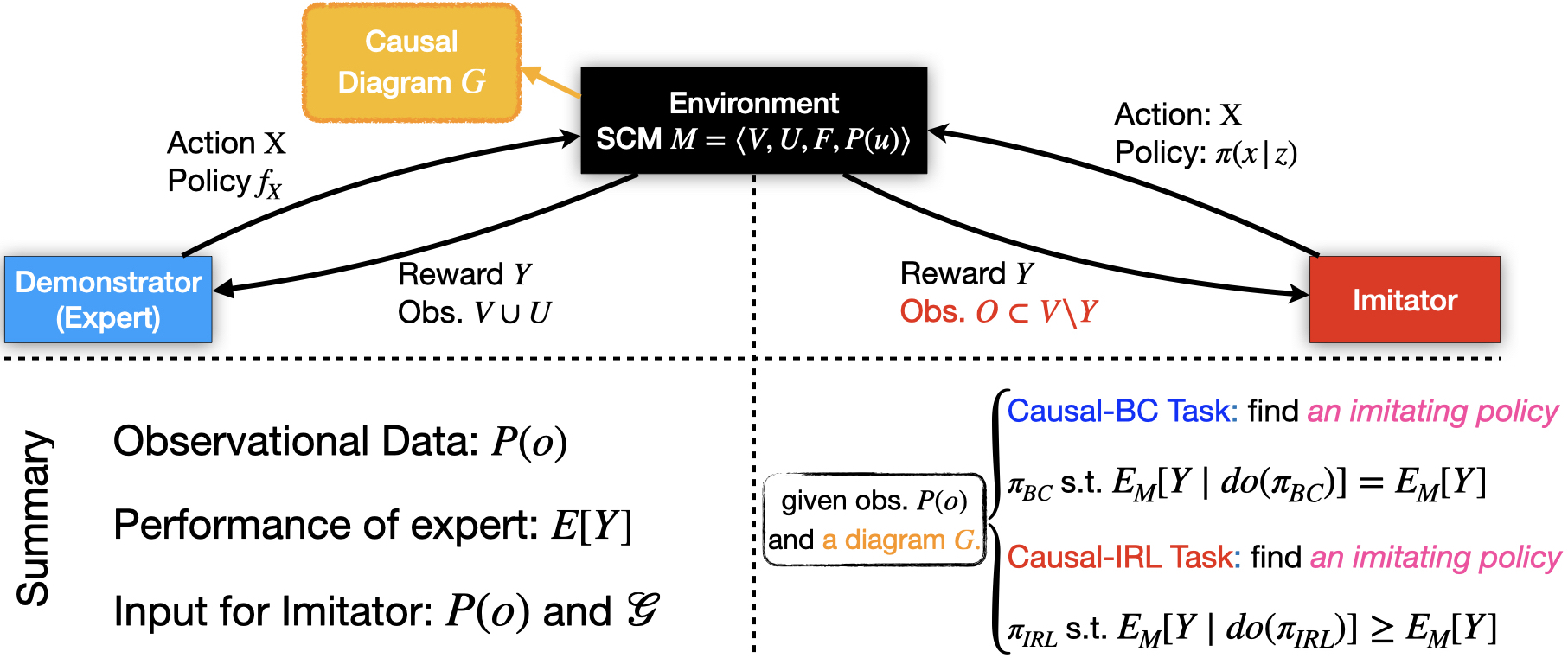

The Eleventh International Conference on Learning Representations, ICLR 2023 [paper] This paper has 2 key contributions. First, the paper analyzes structural conditions on the causal model under which learning the expert policy is possible in the presence of unobserved confounding. Second, the authors further exploit knowledge of the graphical structure to extend IRL algorithms such as GAIL or MWAL to the confounded settings. |

|

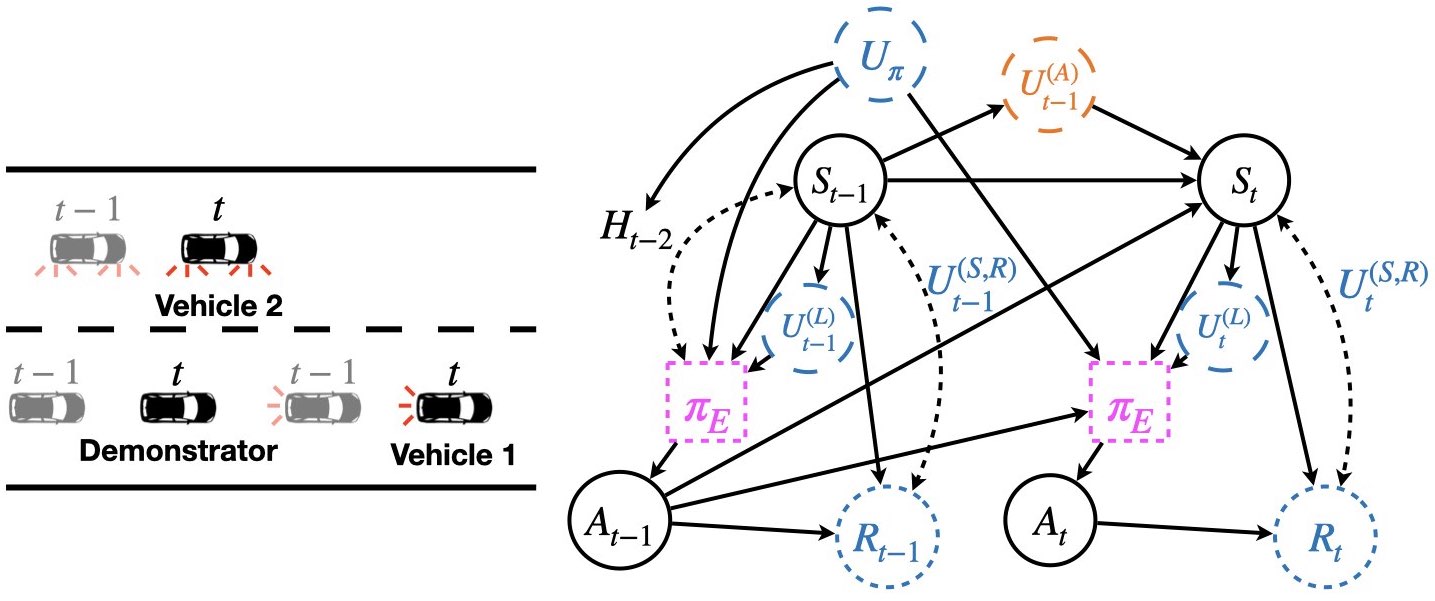

The 36th AAAI Conference on Artificial Intelligence, AAAI 2022 [paper] [code] We develop a sequential causal template that generalizes the default MDP settings to one with Unobserved Confounders (MDPUC-HD). |

|

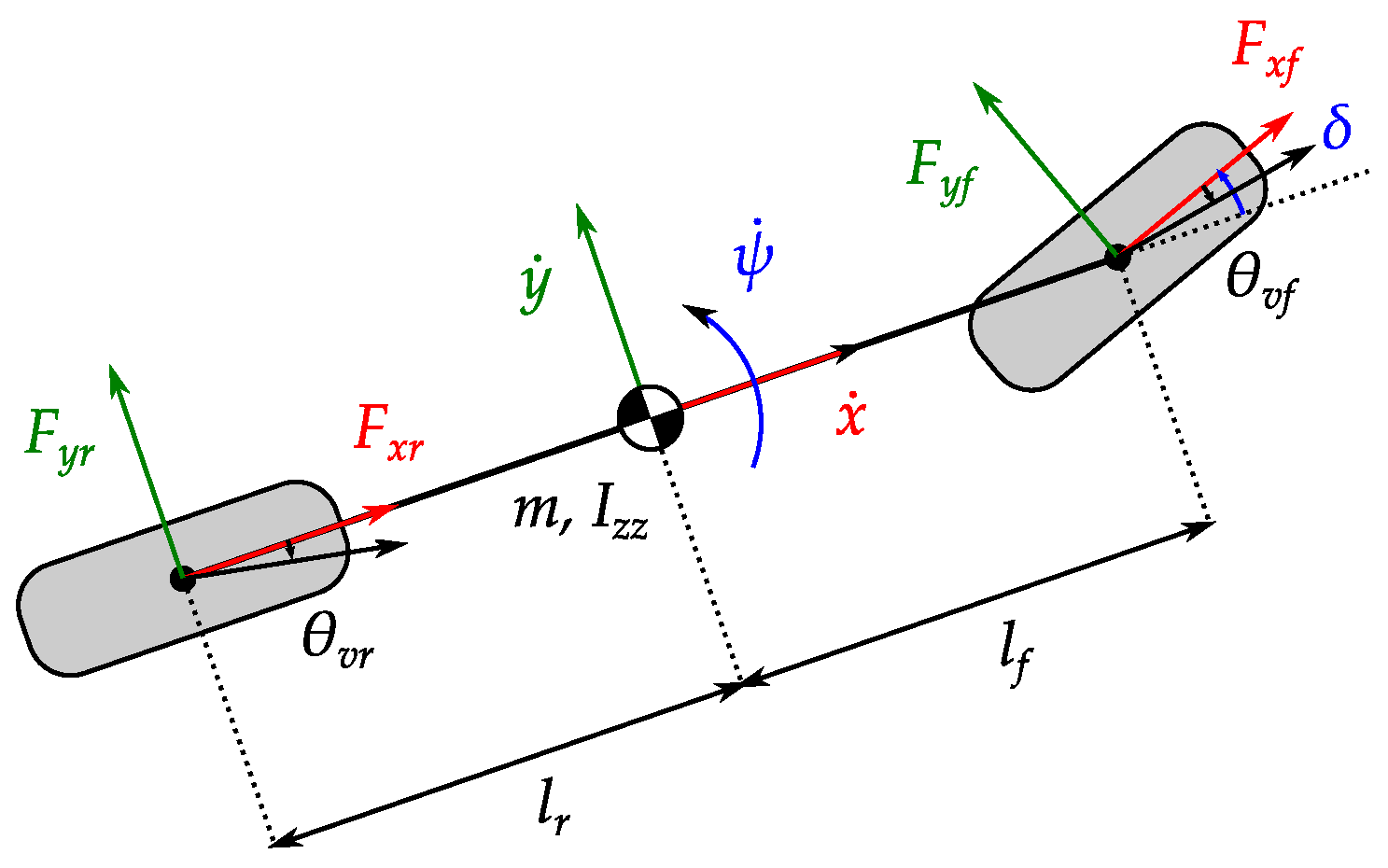

Transportation Research Part C: Emerging Technologies, 2022 [paper] This paper develops an integrated safety-enhanced reinforcement learning (RL) and model predictive control (MPC) framework for autonomous vehicles (AVs) to navigate unsignalized intersections. |

|

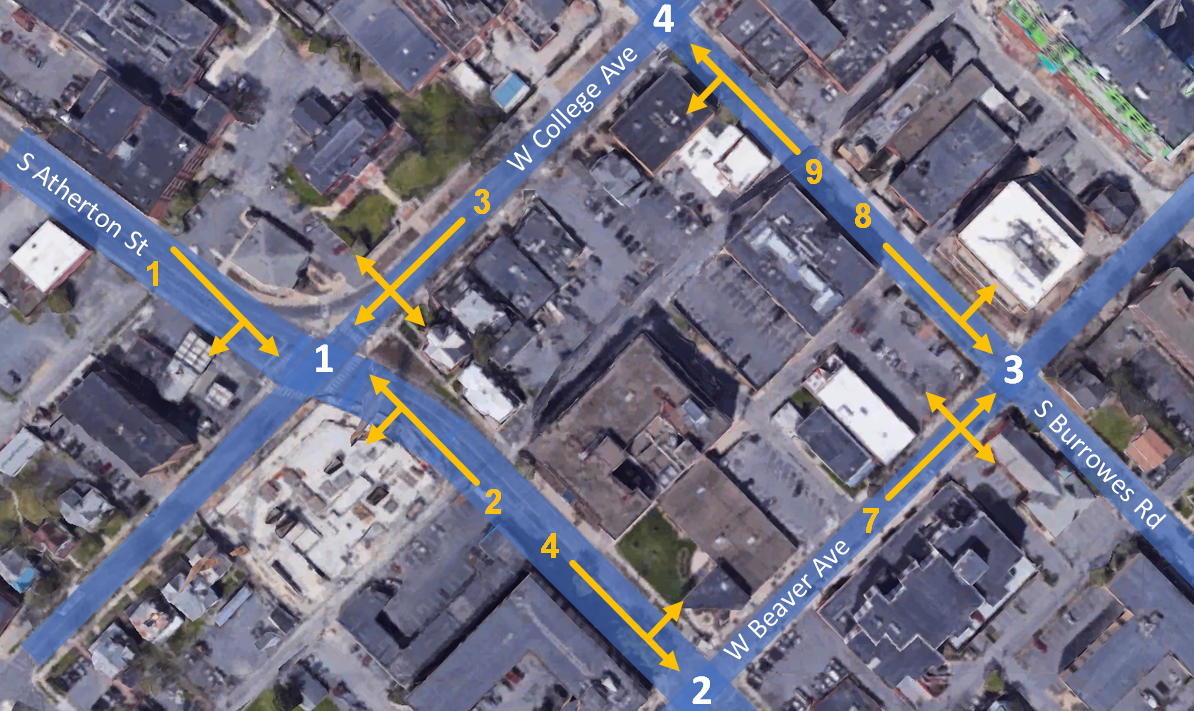

Transportation Research Part C: Emerging Technologies, 2022. [paper] This paper develops a decentralized reinforcement learning (RL) scheme for multi-intersection adaptive traffic signal control (TSC), called “CVLight”, that leverages data collected from connected vehicles (CVs). |